Understanding the Brain of AI – in 10 Minutes

Large Language Models: How an AI Model Understands Our Language

The Tokenizer: How AI Models Translate Text into Numbers

Embeddings: How AI Understands the Meaning of Words

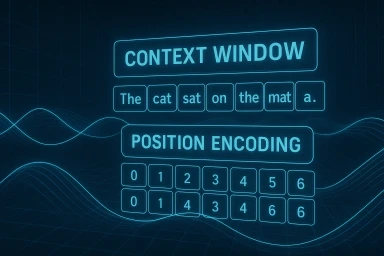

Context Window & Positional Encoding in the Transformer

Self-Attention – The Heart of Modern AI

Self-Attention Decoded – How Transformers Really Think

© 2025 Oskar Kohler. All rights reserved. Note: The text was written manually by the author. Stylistic refinements, translations, and individual tables, diagrams, and figures were produced with the support of AI tools.